Code-free, Cookie-free, Ready-to-Use Product Onboarding Tool

Code-free

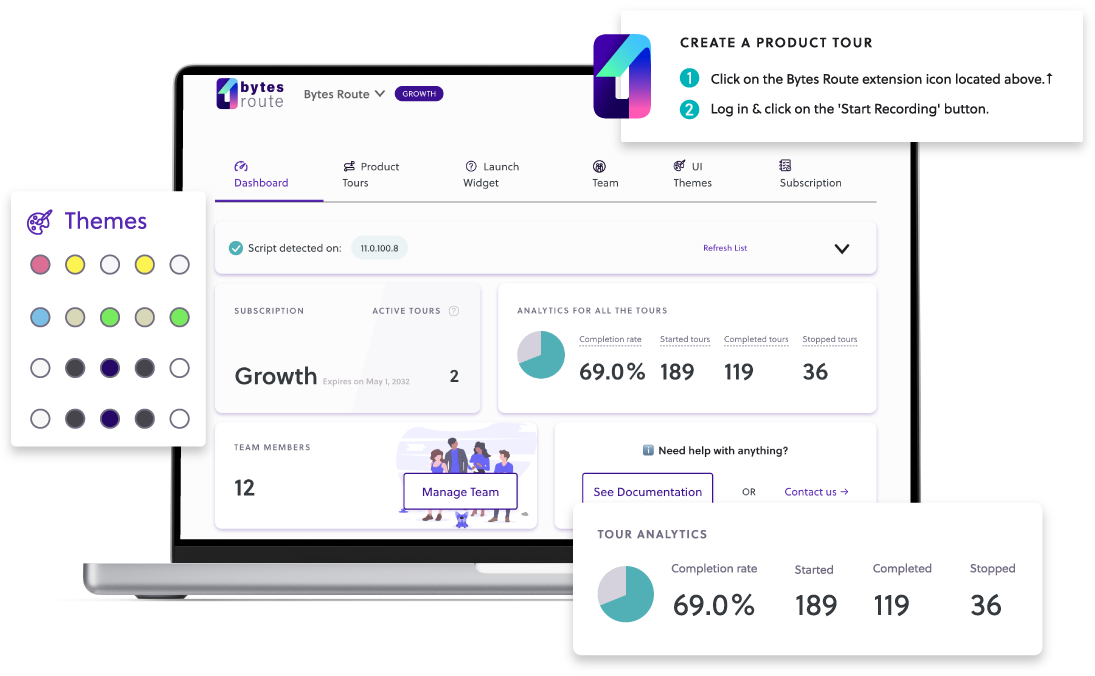

Of course, Bytes Route is a software product, but you do not need any coding skills to use it. (If you do have coding skills, we thought about you too.)

Cookie-free

Yes, that’s right: no strings attached, just onboarding. You can forget about cookie policy updates, GDPR, CCPA, and other data privacy regulations. Bytes Route doesn’t store any user data, only usage data.

Ready-to-use

That means you can use it as is. No interventions, no code to write. It just works. And, yes, we will have product tour templates available.